To manage a vCenter Server Appliance (VCSA), there’s a dedicated user interface called the Virtual Appliance Management Infrastructure (VAMI). You can access it by entering the FQDN of your vCenter into a browser, followed by port 5480:

https://<mycenterFQDN>:5480
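If you want to check from a script whether anything is listening on that port at all, a minimal sketch could look like the one below. The helper names `vami_url` and `is_port_open` and the host `vcsa.example.com` are made up for illustration. Note that it only tests TCP reachability of port 5480; it says nothing about whether a login will actually succeed.

```python
import socket


def vami_url(fqdn: str, port: int = 5480) -> str:
    """Build the VAMI address for a given vCenter FQDN (hypothetical helper)."""
    return f"https://{fqdn}:{port}"


def is_port_open(host: str, port: int = 5480, timeout: float = 3.0) -> bool:
    """Return True if a plain TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution failures.
        return False


if __name__ == "__main__":
    host = "vcsa.example.com"  # placeholder FQDN, replace with your own
    print(vami_url(host))
    print("port reachable:", is_port_open(host))
```

A reachable port only tells you that the web server behind VAMI accepts connections, not that the services behind the login form are healthy.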

VAMI lets you monitor your vCenter Server Appliance: you can change basic settings, apply updates, and watch the system status.

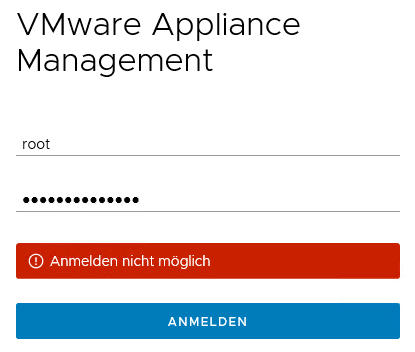

After updating a VCSA to the latest version of vCenter, I had to reboot the appliance. Once the reboot had finished, I wasn’t able to log in to VAMI. Services sometimes need quite some time to become available, but even after waiting a while (a cup of coffee), nothing changed. VAMI presented a login screen, so the web server was obviously up and running. But each time I entered the (correct) credentials, I got the message “unable to login”. The screenshot is in German, but you’ll most likely get the point ;-).