Okay, I admit it, this is not a new topic, but it cost me some time in a client project. Since this blog also acts as a swap partition for my brain, I wrote it down for future reference. It is important to follow the steps in the right order so that the changes are preserved after a reboot.

Why a Coredump-File?

Modern ESXi installations, starting with version 7, use a new partition layout on the boot device. Coredumps are stored there as well, but only if the boot medium is neither a USB flash device nor an SD card. In those cases the coredump is relocated to a VMFS datastore with at least 32 GB capacity.

This is exactly the case I found in a customer environment. The system had been migrated from vSphere 6.7 and therefore still had the old boot layout on an SD card RAID1 (at that time still fully supported). We found a vmkdump folder with one file per host on one of the shared VMFS datastores. This (VMFS5) datastore was supposed to be decommissioned and replaced with a VMFS6 datastore. (Side note from the VCI: there is no online migration path from VMFS5 to VMFS6) 😉 So the vmkdump files had to be removed from there.

Procedure

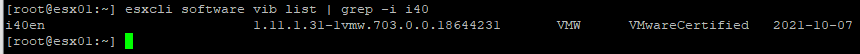

First, we get an inventory of the coredump files.

esxcli system coredump file list

The output lists the coredump files of all ESXi hosts. Each line contains the path and the Active and Configured states (true or false). Active means that this is the current coredump file of this host. Configured must also be true, otherwise the setting will not survive a reboot. Only the coredump file of the current host is shown as active=true. All other files belong to other hosts and are therefore active=false.

By default, the host chooses the first matching VMFS datastore. This is not necessarily the desired one.
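With many hosts sharing a datastore, the list gets long. The following is a minimal sketch of a filter that prints only the file that is active on the current host. The function name `active_dumpfile` is made up for illustration, and the sketch assumes that the list output is whitespace-separated with the columns in the order Path, Active, Configured, and that awk is available on the ESXi shell.

```shell
# Hypothetical helper: print the path of the dump file that is Active
# on this host. Reads the output of the list command from stdin.
# Assumption: columns are Path, Active, Configured (whitespace-separated).
active_dumpfile() {
    # Data lines start with "/" (the path); header lines are skipped.
    awk '$1 ~ /^\// && $2 == "true" { print $1 }'
}

# Usage on the ESXi shell:
#   esxcli system coredump file list | active_dumpfile
```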

Remove the current Coredump-File

First we delete the active coredump file of the host. We have to force the removal because it is set as active=true.

esxcli system coredump file remove --force

If we execute the list command from above again, there should be one line less.

Add a new Coredump File

The next command creates a new coredump file at the destination. If a vmkdump folder does not already exist there, it is created, and the dumpfile is placed in it. We specify the desired file name without extension, because the extension (.dumpfile) is appended automatically.

esxcli system coredump file add -d <Name | UUID> -f <filename>

Example: the host is named “ESX-01” and the VMFS datastore is named “Service”. The datastore may be specified either by its display name or by its datastore UUID.

esxcli system coredump file add -d Service -f ESX-01

A folder vmkdump will be created on the designated datastore and a file named ESX-01.dumpfile will be created in it. We can check this using the list command.

esxcli system coredump file list

A new line will appear with the full path to the new dumpfile. However, the status is still active=false and configured=false. It might be useful to copy this full path to the clipboard, because it is required in the next step.
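As an alternative to the clipboard, the path can be captured in a shell variable. This is only a sketch: `find_dumpfile` and `DUMP_PATH` are hypothetical names, the path is assumed to be the first whitespace-separated column of the list output, and the file name is assumed to be unique among the listed dumpfiles.

```shell
# Hypothetical helper: print the full path of the dump file whose path
# ends in /vmkdump/<name>.dumpfile. Reads the list output from stdin.
# Assumption: the path is the first column; only the first match is used.
find_dumpfile() {
    awk -v s="/vmkdump/$1.dumpfile" \
        'substr($1, length($1) - length(s) + 1) == s { print $1; exit }'
}

# Usage on the ESXi shell:
#   DUMP_PATH=$(esxcli system coredump file list | find_dumpfile ESX-01)
#   echo "$DUMP_PATH"
```

The variable can then be passed to the set command in the next step instead of a pasted path.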

Activate Dumpfile

In the following step, we set the created dumpfile to active. This way, the setting is retained even after a host reboot. We specify the complete path to the dumpfile. Pasting it from the clipboard is helpful here and avoids typos.

esxcli system coredump file set -p <path_to_dumpfile>

Example:

esxcli system coredump file set -p /vmfs/volumes/<UUID>/vmkdump/ESX-01.dumpfile

A final list command validates the result. The new dumpfile should now show both Active and Configured as true.
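For those who prefer an exit status over reading the table, the check can be sketched as a small filter. The function name `dumpfile_is_persistent` is invented for this example, and the column order Path, Active, Configured is again an assumption about the list output.

```shell
# Hypothetical helper: succeed (exit status 0) only if the given path is
# listed with Active=true and Configured=true. Reads the list output
# from stdin. Assumption: columns are Path, Active, Configured.
dumpfile_is_persistent() {
    awk -v p="$1" \
        '$1 == p && $2 == "true" && $3 == "true" { ok = 1 }
         END { exit(ok ? 0 : 1) }'
}

# Usage on the ESXi shell (path as used in the set command):
#   esxcli system coredump file list | dumpfile_is_persistent "$DUMP_PATH" \
#       && echo "dumpfile is active and configured"
```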

Links

- VMware Documentation – Deactivate and Delete a Core Dump File

- VMware Documentation – Set Up a File as Core Dump Location