Originally, this article was supposed to be called “The role paradox”. On further reflection, I concluded that it is not a paradox in the true sense of the word. vCenter is just doing its job.

Authorization in vSphere is basically simple, as long as we do not need restricted permissions. If we are members of the administrators group and have unrestricted access to all objects in the data center, privileges and roles are quickly explained.

Definition of terms

A privilege is the smallest unit. It allows the execution of a very specific action.

A role is a collection of privileges. The administrator role contains all available privileges. The no-access role, on the other hand, does not contain any privileges. “No access” is not to be understood here as an explicit denial, but as a lack of privileges. What may initially seem like a semantic quibble is an important difference from other authorization concepts such as Active Directory.

Missing privilege != denial
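To make the distinction concrete, here is a minimal sketch that treats a role as nothing more than a set of privileges. It is a toy model, not the vSphere API: the three privilege IDs stand in for the full list, and the `ROLES` dictionary and `is_allowed` helper are assumptions made for illustration.

```python
# Toy model (not the vSphere API): a role is a named set of privilege IDs.
# The privilege list is deliberately tiny and only illustrative.
ALL_PRIVILEGES = frozenset({
    "System.View",
    "VirtualMachine.Interact.PowerOn",
    "VirtualMachine.Interact.PowerOff",
})

ROLES = {
    "Administrator": ALL_PRIVILEGES,  # contains every available privilege
    "No access": frozenset(),         # contains nothing at all
}


def is_allowed(role_name: str, privilege: str) -> bool:
    """An action is possible only if the role contains the privilege."""
    return privilege in ROLES[role_name]


# "No access" blocks the action because the privilege is missing,
# not because a deny entry exists. The model has no deny entries.
print(is_allowed("Administrator", "VirtualMachine.Interact.PowerOn"))
print(is_allowed("No access", "VirtualMachine.Interact.PowerOn"))
```

In this model a role can only grant. There is no structure that could hold an explicit "deny", which is exactly the point of the statement above.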

A permission is always made up of three components: a vSphere object, a role and a user or user group. A user (or a group) can have different roles on different objects. Permissions on objects can be propagated to child objects.
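The three-part structure of a permission can be sketched in a few lines of Python. This is a deliberately simplified model under stated assumptions: the inventory (`PARENT`), the principal `ops-team`, the role names and the lookup function `role_for` are all hypothetical, group membership is ignored, and the lookup simply takes the nearest matching permission. It is not a description of how vCenter evaluates permissions internally.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Permission:
    obj: str                # the vSphere object the permission is set on
    principal: str          # a user or a user group
    role: str               # the role granted on that object
    propagate: bool = True  # whether child objects inherit the permission


# Hypothetical inventory, stored as child -> parent.
PARENT = {
    "vm-01": "folder-prod",
    "folder-prod": "datacenter",
    "datacenter": None,
}


def role_for(principal: str, obj: str, permissions: list) -> Optional[str]:
    """Walk from obj towards the root and return the first matching role.

    A permission on an ancestor counts only if it propagates.
    """
    current = obj
    while current is not None:
        for p in permissions:
            if p.obj == current and p.principal == principal:
                if current == obj or p.propagate:
                    return p.role
        current = PARENT[current]
    return None  # no permission found: the principal has no role here


permissions = [
    Permission("datacenter", "ops-team", "Read-only", propagate=True),
    Permission("folder-prod", "ops-team", "VM operator", propagate=False),
]

print(role_for("ops-team", "folder-prod", permissions))  # set directly on the folder
print(role_for("ops-team", "vm-01", permissions))        # inherited from the data center
```

The same principal ends up with different roles on different objects: the folder permission applies to the folder only because it does not propagate, so the VM below it falls back to the role inherited from the data center.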

The challenge

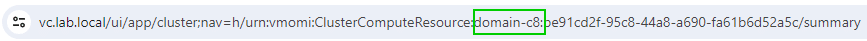

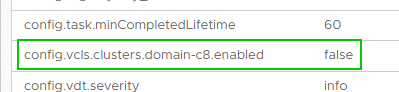

Things get interesting when I assign rights globally, but then want to restrict them on certain objects.

Example: The administrators group should have access to all objects, with the exception of some VMs in a defined VM folder. Sounds simple – but it’s not.

I became aware of the problem described here through my colleague Alexei Prozorov, who came across this phenomenon in a customer project. The topic was so interesting that I had to recreate it in the lab.
