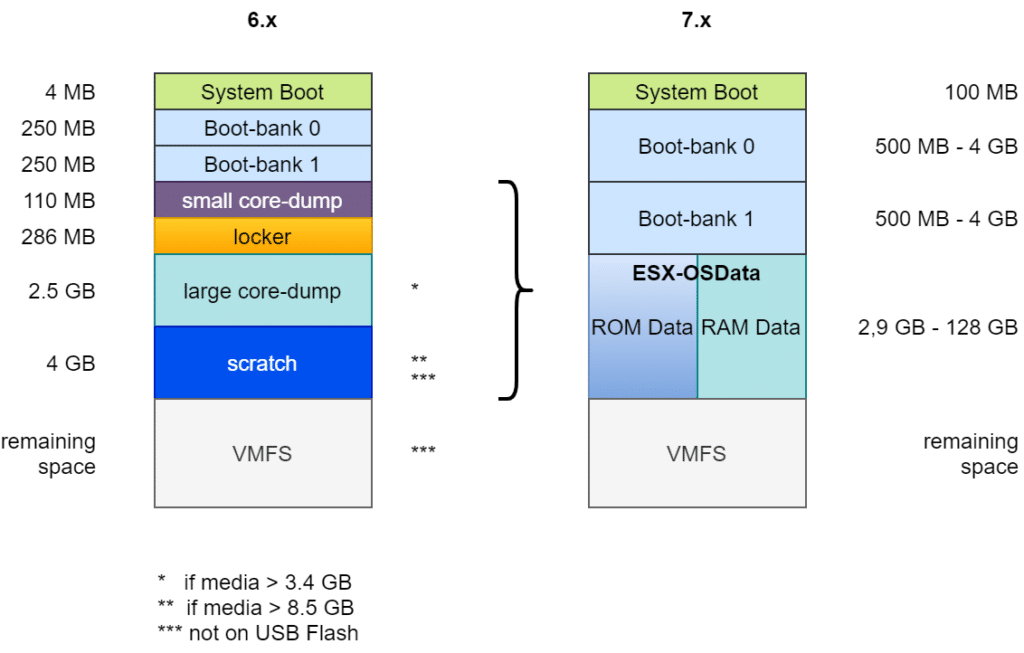

The requirements for ESXi boot media changed fundamentally with ESXi v7 Update 3. The partition layout was changed, and the demands on the durability of the medium have also increased. I covered this in my blog article “ESXi Bootmedia – New features in v7 und legacy issues from the past v6.x”.

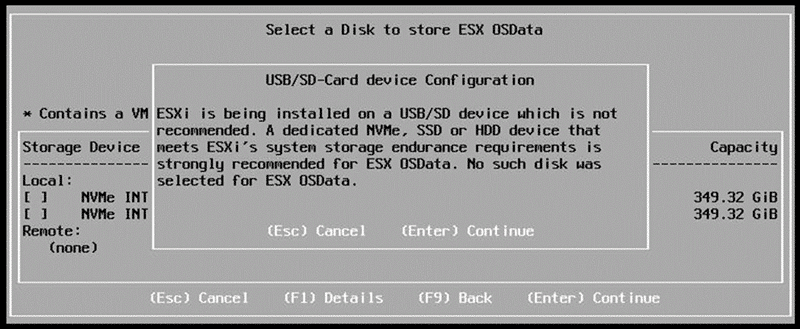

USB boot media proved not to be robust enough and are therefore no longer supported from v7U3 onwards. It is still possible to install ESXi on USB media, but the ESX-OSData partition must then be redirected to persistent storage.

Warning! USB media and SD cards should not be used for production ESXi installations!

Valid setup targets for ESXi deployments

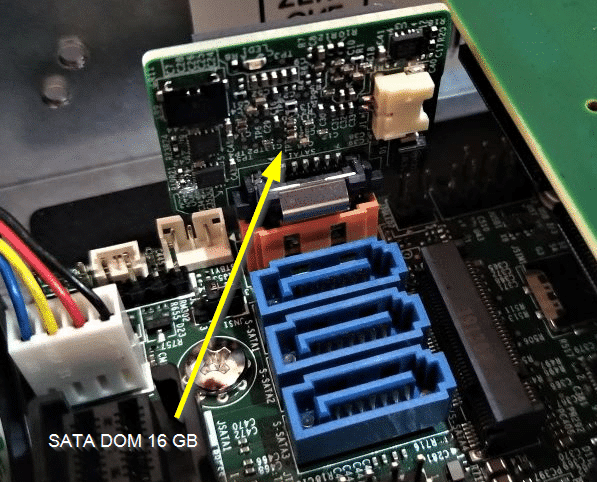

SD cards and USB media are unsuitable as installation targets due to their poor write endurance. Magnetic disks, SSDs and SATA DOMs (disk-on-modules) are still permitted and recommended.

New requirements from version ESXi v8 onwards

My homelab previously ran vSAN 7 and thus the classic OSA architecture. Running the cluster on the new vSAN ESA architecture required vSphere 8 and new storage devices.

I tested the installation and hardware compatibility on a 64 GB USB medium (not recommended and not supported!). As expected, the installer issued warnings about the USB medium. Nevertheless, I was able to successfully test the detection of the NVMe devices and the vCenter deployment.

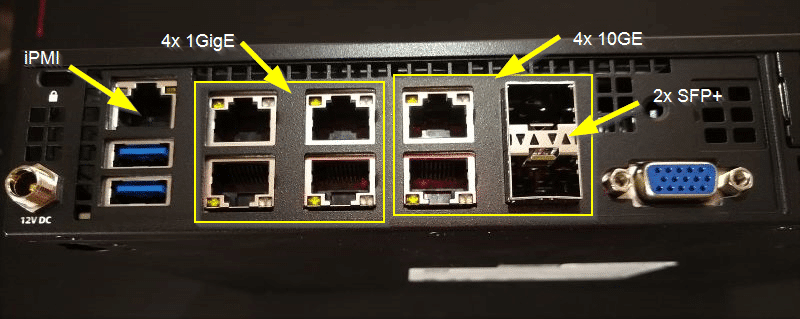

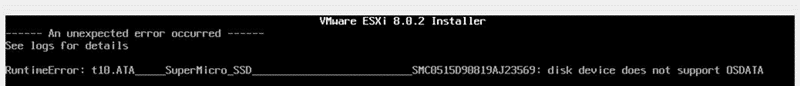

Having successfully completed the test phase, I tried to install ESXi 8U2 on the SATA DOM of my Supermicro E300 server. To my surprise, the setup failed at a very early stage with the message: “disk device does not support OSDATA”.

RTFM

The explanation is simple: “Read the fine manual!”

My 16 GB SATA DOM from Supermicro was simply too small.

The setup guide for ESXi 8 clearly states the requirements under “Storage Requirements for ESXi 8.0 Installation or Upgrade”:

For best performance of an ESXi 8.0 installation, use a persistent storage device that is a minimum of 32 GB for boot devices. Upgrading to ESXi 8.0 requires a boot device that is a minimum of 8 GB. When booting from a local disk, SAN or iSCSI LUN, at least a 32 GB disk is required to allow for the creation of system storage volumes, which include a boot partition, boot banks, and a VMFS-L based ESX-OSData volume. The ESX-OSData volume takes on the role of the legacy /scratch partition, locker partition for VMware Tools, and core dump destination.

VMware vSphere product documentation

In other words: new installations require a boot medium of at least 32 GB (128 GB recommended). Upgrading from an ESXi v7 version requires at least 8 GB, but on a device that small the OSData partition of the existing installation must already be redirected to an alternate storage device.
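The sizing rule above can be written down as a small decision function. This is only a sketch of my reading of the documentation, not what the ESXi installer actually does internally; the names `BootDevice` and `check_esxi8_boot_device` are made up for illustration.

```python
from dataclasses import dataclass
from typing import Tuple

MIN_INSTALL_GB = 32  # fresh ESXi 8 install, from the quoted documentation
MIN_UPGRADE_GB = 8   # upgrade to ESXi 8, from the quoted documentation


@dataclass
class BootDevice:
    """Hypothetical description of a boot medium (not a VMware data type)."""
    size_gb: int
    osdata_redirected: bool = False  # ESX-OSData already lives on other storage


def check_esxi8_boot_device(device: BootDevice, upgrade: bool) -> Tuple[bool, str]:
    """Return (ok, reason) according to the sizing rule summarized above."""
    if device.size_gb >= MIN_INSTALL_GB:
        return True, "large enough for boot banks and ESX-OSData"
    if not upgrade:
        return False, f"new installation needs at least {MIN_INSTALL_GB} GB"
    if device.size_gb < MIN_UPGRADE_GB:
        return False, f"upgrade needs at least {MIN_UPGRADE_GB} GB"
    if not device.osdata_redirected:
        return False, "upgrade on a small device needs ESX-OSData redirected"
    return True, "small device accepted because ESX-OSData is redirected"


if __name__ == "__main__":
    sata_dom_16 = BootDevice(size_gb=16)
    print(check_esxi8_boot_device(sata_dom_16, upgrade=False))
    print(check_esxi8_boot_device(sata_dom_16, upgrade=True))
    print(check_esxi8_boot_device(BootDevice(size_gb=64), upgrade=False))
```

With these assumptions, a 16 GB device is rejected both for a fresh installation and for an upgrade without a redirected OSData partition, while a 64 GB device passes.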

Dirty Trick?

Needless to say, I tried a dirty trick. I first successfully installed ESXi 7U3 on the 16 GB SATA DOM and then attempted an upgrade installation to v8U2. This attempt also failed, because a fresh v7 installation does not redirect the OSData area.

I don’t want to install on USB media as I have seen too many cases where these devices have failed. The only option is to invest in a larger SATA DOM.

I opted for the 64 GB model because it is a good compromise between minimum requirements and cost-effectiveness.