Admission control is part of vSphere High Availability (HA). It ensures availability in case of host failures by guaranteeing that enough cluster capacity (CPU and memory) is left for an HA failover. To do so, it prevents VM power-on actions that would violate that guarantee.

Since vSphere 6.5 there’s a dynamic calculation of the minimum required resources, based on the number of hosts in the cluster and the number of host failures you want to tolerate.

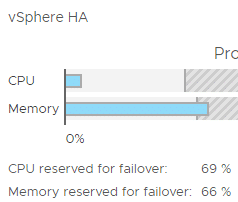

Let’s start with an example: a cluster has two identical hosts and should tolerate one host failure. Admission control will make sure that neither CPU nor memory load exceeds 50% of your total resources. If you lose one host, there will be enough resources to restart the VMs on the remaining host.

Now imagine you’re adding another two hosts to the cluster. The number of host failures to tolerate is still 1, but now the dynamic resource calculation kicks in. With four hosts, admission control will let you fill the cluster up to 75% before it prevents further VM power-on actions.
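The arithmetic behind these two examples can be sketched in a few lines of Python. This is only an illustration, not VMware's actual implementation: it assumes all hosts are identical, so the reserved share is simply the number of host failures to tolerate divided by the number of hosts. The function name `usable_percentage` and its parameters are made up for this sketch.

```python
def usable_percentage(hosts: int, failures_to_tolerate: int) -> float:
    """Return the share of cluster resources (in percent) that may be used.

    Assumption: all hosts are identical, so each host contributes
    the same fraction of the total cluster capacity.
    """
    if hosts < 1:
        raise ValueError("a cluster needs at least one host")
    if not 0 <= failures_to_tolerate < hosts:
        raise ValueError("failures to tolerate must be between 0 and hosts - 1")

    # Capacity held back for failover, as a percentage of the whole cluster.
    reserved = failures_to_tolerate / hosts * 100
    return 100 - reserved


# The two clusters from the text, both tolerating one host failure.
for host_count in (2, 4):
    usable = usable_percentage(host_count, failures_to_tolerate=1)
    print(f"{host_count} hosts: usable up to {usable:.0f}%")
```

With two hosts the sketch yields 50%, with four hosts 75%, matching the numbers above. Clusters with unequal hosts would need a calculation based on actual host capacities, which this sketch deliberately leaves out.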

That’s great, because you only have to define the desired number of host failures to tolerate, and HA admission control dynamically calculates the allowed percentage of cluster resources. It works the same way whether you add or remove hosts.
