This will be a multi-part post focused on the VMware Bitfusion product. I will give an introduction to the technology, how to set up a Bitfusion server and how to use its services from Kubernetes pods.

- Part 1 : A primer to Bitfusion (this article)

- Part 2 : Bitfusion server setup

- Part 3 : Using Bitfusion from Kubernetes pods

What is Bitfusion?

In August 2019, VMware acquired Bitfusion, a leader in GPU virtualization. Bitfusion provides a software platform that decouples specific physical resources, such as GPUs, from compute servers. It is not designed for graphics rendering, but rather for machine learning (ML) and artificial intelligence (AI). As of today, Bitfusion systems (client and server) run only on selected Linux platforms and support ML frameworks such as TensorFlow.

Why are GPUs so important for ML/AI applications?

Processors (central processing units, CPUs) in current systems are optimized to process serial tasks in the shortest possible time and to switch quickly between tasks. GPUs (graphics processing units), on the other hand, can process a large number of computing operations in parallel. The originally intended application is in the name: the GPU was meant to offload the CPU in graphics rendering by taking over all rendering and polygon calculations. In the mid-90s, some 3D games still let you choose whether to render with the CPU or the GPU. Even then, the difference was like night and day. The GPU performed the necessary polygon calculations much faster and more smoothly.

A fine comparison of GPU and CPU architecture can be found in Niels Hagoort's blog post “Exploring the GPU Architecture”.

However, due to their architecture, GPUs are ideal not only for graphics applications, but also for any application in which a very large number of arithmetic operations have to be executed in parallel. This includes blockchain, ML, AI and any kind of data analysis (number crunching).
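To make "executed in parallel" a little more concrete, here is a minimal Python sketch of a data-parallel operation (SAXPY, `a*x + y`). The function names are illustrative and nothing here runs on a GPU. The point is only that each output element depends on a single index, so the elements can be computed in any order or all at once, which is the kind of work a GPU spreads across its many cores.

```python
import random

def saxpy_element(a, x, y, i):
    """Compute one output element; it needs only index i of each input."""
    return a * x[i] + y[i]

def saxpy_serial(a, x, y):
    """CPU-style: walk the indices one after another."""
    return [saxpy_element(a, x, y, i) for i in range(len(x))]

def saxpy_any_order(a, x, y, seed=0):
    """Visit the indices in shuffled order to show the elements are independent.

    A GPU would hand each index to its own thread instead of shuffling.
    """
    order = list(range(len(x)))
    random.Random(seed).shuffle(order)
    out = [0.0] * len(x)
    for i in order:
        out[i] = saxpy_element(a, x, y, i)
    return out

x = [1.0, 2.0, 3.0, 4.0]
y = [10.0, 20.0, 30.0, 40.0]
print(saxpy_serial(2.0, x, y))     # [12.0, 24.0, 36.0, 48.0]
print(saxpy_any_order(2.0, x, y))  # same values, computed in a different order
```

Because no element waits for another, the same computation scales from four values to millions without changing its structure.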

What’s the concept behind Bitfusion?

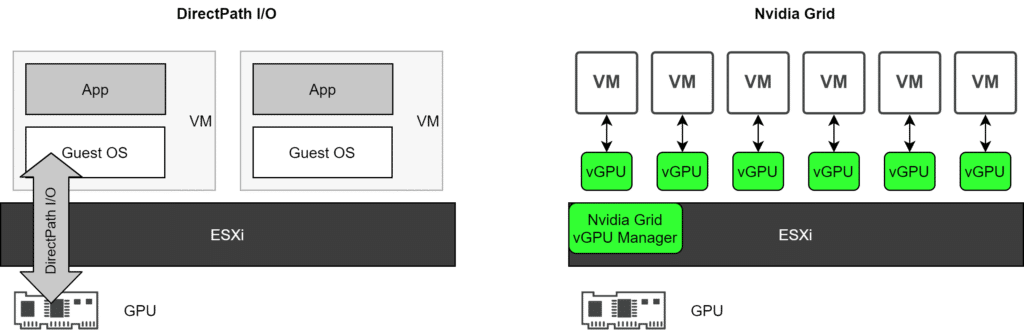

The concept of using physical GPU resources from virtual machines is not new. In previous approaches, the consuming VM had to be on a host system that had a physical GPU resource itself. The GPU could then be used either exclusively via DirectPath I/O or shared via Nvidia Grid.

DirectPath I/O provides a PCI resource such as a graphics card (GPU) exclusively to a VM. The GPU cannot be shared with other VMs. Migration of this VM to other hosts (vMotion) is not possible.

Nvidia Grid splits the physical GPU into multiple virtual GPUs (vGPUs), which can then be used by multiple VMs on the same host.

Both solutions have one thing in common: All VMs that use GPU resources must be located on the same host that has the physical GPU resource. This has implications for cluster design. Either I can run VMs that consume GPU only on selected ESXi hosts, or I must provide all compute hosts with appropriate graphics hardware. GPU and compute are always coupled.

The idea behind Bitfusion

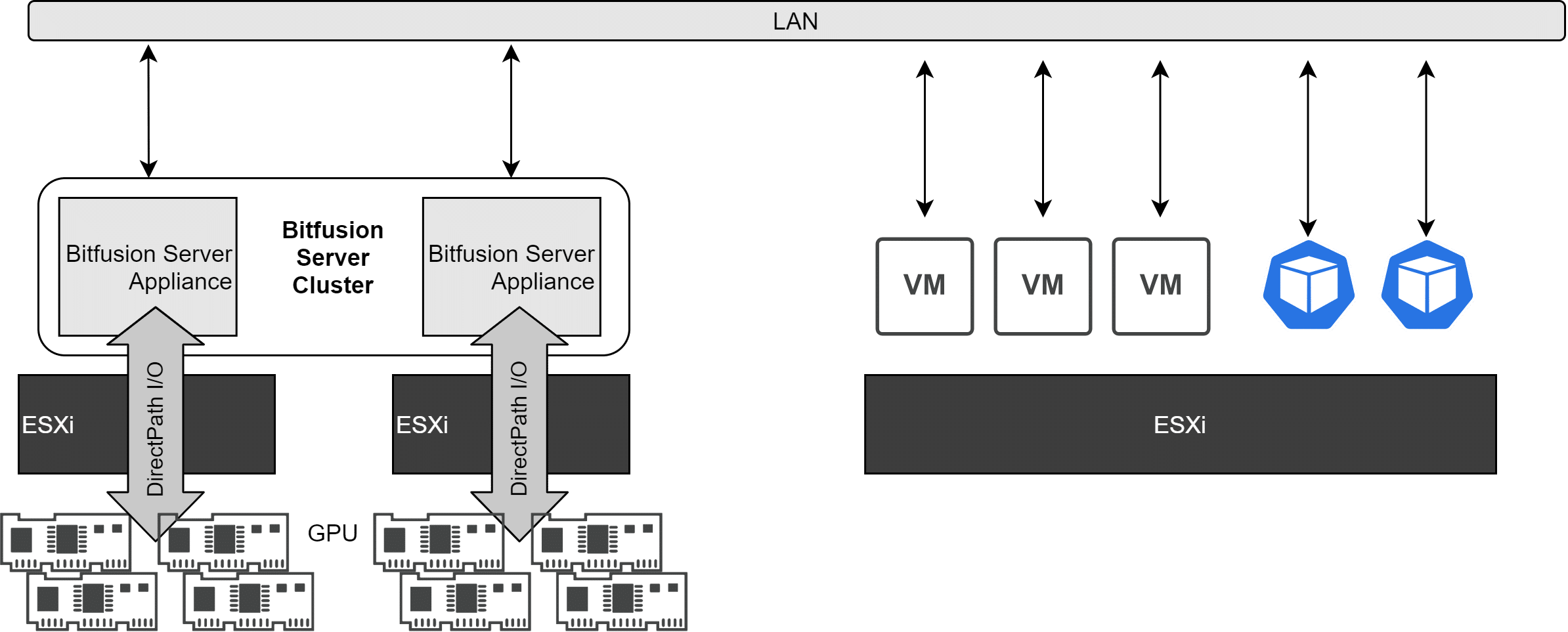

VMware Bitfusion decouples physical GPU resources from consumers by providing physical GPU resources as a pool on the network. Consumers (VMs, containers or bare-metal servers) no longer need to run on the same hardware as the GPU.

Applications can share physical GPU resources that are no longer exclusively allocated to individual VMs. Applications can run on specially prepared VMs, containers, or physical systems. They use GPU compute power from a pool of Bitfusion servers over the LAN, claiming resources only for the duration of an application or session’s execution. The GPU resources are returned to the pool as soon as the respective application or sessions are finished.
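The claim-and-return life cycle described above can be sketched as a small Python model. This is a toy illustration only: `GpuPool`, `session` and the GPU names are hypothetical and do not correspond to the Bitfusion API. It shows GPUs being taken from a shared pool for the duration of a session and handed back when the session ends, even if the application fails.

```python
from contextlib import contextmanager

class GpuPool:
    """Toy model of a shared pool of GPUs (hypothetical, not the Bitfusion API)."""

    def __init__(self, gpu_ids):
        self.free = list(gpu_ids)
        self.in_use = {}  # gpu_id -> name of the session holding it

    @contextmanager
    def session(self, name, count=1):
        """Claim `count` GPUs for the duration of the with-block."""
        if count > len(self.free):
            raise RuntimeError(f"{name}: only {len(self.free)} GPU(s) free")
        claimed = [self.free.pop(0) for _ in range(count)]
        for gpu in claimed:
            self.in_use[gpu] = name
        try:
            yield claimed
        finally:
            # Return the GPUs to the pool even if the application fails.
            for gpu in claimed:
                del self.in_use[gpu]
                self.free.append(gpu)

pool = GpuPool(["gpu0", "gpu1"])
with pool.session("training-job", count=2) as gpus:
    print("claimed:", gpus, "free:", pool.free)
print("after session, free:", pool.free)
```

While the `with` block is active the pool is empty; once it exits, both GPUs are available to the next consumer.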

The core of a Bitfusion cluster is the Bitfusion Server Appliance. It receives GPU resources from the ESXi host, which are provided to it via DirectPath I/O. One or more appliances are provisioned per ESXi host, and multiple appliances can be connected to form a Bitfusion cluster. The number of physical GPUs per appliance determines how many resources a client can book simultaneously. Currently, a process can only address one Bitfusion server at a time. The Bitfusion cluster controls the allocation of resources.

- Bitfusion Server: Provides GPU compute resources over the network

- Bitfusion Clients: Consume GPU processing resources from the Bitfusion server. Work packets are transmitted to the Bitfusion server over LAN and the results are in turn sent back to the clients over LAN. The clients write directly to the GPU RAM. Thus, when GPU resources are booked, it is not GPU processing time that is requested, but rather shares of GPU memory.
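Booking shares of GPU memory rather than processing time can be pictured with another small Python model. Everything in it is an assumption made for illustration: the `SharedGpu` class, the 16000 MB card size and the simple "refuse if it does not fit" rule are hypothetical and say nothing about how the Bitfusion scheduler is actually implemented.

```python
class SharedGpu:
    """Toy model of one GPU whose memory is booked in fractions (hypothetical)."""

    def __init__(self, memory_mb):
        self.memory_mb = memory_mb
        self.bookings = {}  # client name -> booked memory in MB

    def free_mb(self):
        return self.memory_mb - sum(self.bookings.values())

    def book(self, client, fraction):
        """Reserve `fraction` (0 < fraction <= 1) of the GPU memory for a client."""
        if not 0 < fraction <= 1:
            raise ValueError("fraction must be in (0, 1]")
        wanted = int(self.memory_mb * fraction)
        if wanted > self.free_mb():
            return False  # not enough memory left; the request has to wait
        self.bookings[client] = wanted
        return True

    def release(self, client):
        """Give the client's memory share back."""
        self.bookings.pop(client, None)

gpu = SharedGpu(memory_mb=16000)
print(gpu.book("client-a", 0.5))   # True, 8000 MB booked
print(gpu.book("client-b", 0.25))  # True, 4000 MB booked
print(gpu.book("client-c", 0.5))   # False, only 4000 MB left
gpu.release("client-a")
print(gpu.book("client-c", 0.5))   # True, client-a's share was returned
```

Several clients can hold a share of the same card at once, and a request that does not fit succeeds only after another client has released its share.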

Client applications transfer program code to the physical GPU via the CUDA API. CUDA is a parallel computing platform and programming model developed by Nvidia that allows parts of the program code to be executed on the GPU. The GPU thus acts as a coprocessor of the CPU.

CUDA supports Nvidia graphics cards from the GeForce 8 series onward and Quadro cards from the FX 5600 onward.
