I recently upgraded my homelab from vSphere 6.7 U3 to vSphere 7. The workflow is straightforward, and the VMware design team did a very good job with the UI.

First steps

I cannot stress this enough: check the VMware HCL. Just because your system is supported under your current vSphere version doesn’t mean it will be supported under vSphere 7 too. On the day I upgraded, vSphere 7 was brand new and there were only a few entries in the HCL. But it’s a homelab, and if something breaks I don’t mind rebuilding it from scratch. Don’t do this in production!

Although my Supermicro E300-9D is not yet certified for version 7.0, it works like a charm. I guess it’s just a matter of time, because the VMware Nano-Edge cluster is based on that hardware.

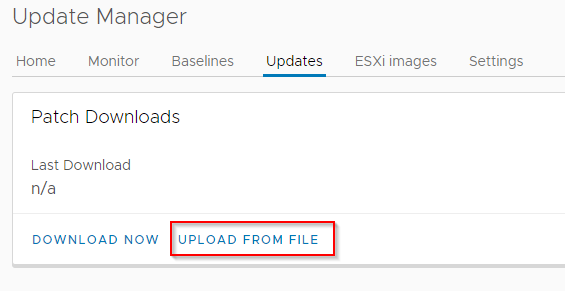

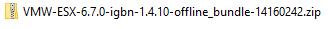

Before you can start, you need to download the vCenter Server Appliance (VCSA) 7.0 from VMware downloads (login required). You also need new license keys for vCenter, ESXi, and vSAN (if your cluster is hyperconverged).
